Tesla and Uber Unlikely to Survive...

Discussion

RobDickinson said:

You don't even know how many cameras the cars have yet think yourself some kind of nn expert lol.

No, I'm not an expert in Teslas, but I've delivered machine learning solutions using petabytes of information for a global company, worked for another that develops performance telematics for race cars and currently work with vision processing systems for autonomous robotics amongst other things. So of course I'm interested in what Tesla does, and what Musk is claiming. This is world-changing stuff, and whichever direction Tesla goes, it's going to have a huge impact on the industry. That doesn't mean we should credulously accept anything Musk says - and he really is stretching credulity at the moment.

Some people treat this stuff as magic, which means they have no context to decide whether one claim is any more difficult to deliver than another. Even in the industry, we're not very good at judging how difficult problems will be to solve - we've been promising AI from computers for most of my lifetime, and we're still nowhere near cracking that one. I have no more idea than the next guy what secret sauce Tesla is going to unleash on the world - but I can try and decipher the technology they're working with and what their chances are.

Tuna said:

No, I'm not an expert in Teslas

Me neither, from this technical POV. It seems an invented narrative though: (1) they send data via cellular, (2) this must be small because (3) it would cost them a lot.

That's a lot of assumptions, very much like back when people 'proved' they couldn't make more than 5000 cars a week because of paint.

(1) They don't need to send this data via cellular; most cars connect to wifi and it isn't time-critical data. It would have to be stored on the car somewhere regardless.

(2) You're likely not sending video or images, just the results of the NN processing, which would be a relatively small dataset. I assume if they needed more they could easily send more.

(3) If it's important to something like this, cell data likely won't be a significant cost for the number of incidents/edge cases talked about anyhow.

Tuna said:

RobDickinson said:

You don't even know how many cameras the cars have yet think yourself some kind of nn expert lol.

No, I'm not an expert in Teslas, but I've delivered machine learning solutions using petabytes of information for a global company, worked for another that develops performance telematics for race cars and currently work with vision processing systems for autonomous robotics amongst other things. So of course I'm interested in what Tesla does, and what Musk is claiming. This is world-changing stuff, and whichever direction Tesla goes, it's going to have a huge impact on the industry. That doesn't mean we should credulously accept anything Musk says - and he really is stretching credulity at the moment.

Some people treat this stuff as magic, which means they have no context to decide whether one claim is any more difficult to deliver than another. Even in the industry, we're not very good at judging how difficult problems will be to solve - we've been promising AI from computers for most of my lifetime, and we're still nowhere near cracking that one. I have no more idea than the next guy what secret sauce Tesla is going to unleash on the world - but I can try and decipher the technology they're working with and what their chances are.

It was interesting as he was describing asking the fleet for particular graphics, bikes attached to the back of cars being one. Are you saying that clever as this may be, the neural net can't possibly learn enough without uploading vastly more data than it currently is?

DJP31 said:

I was impressed by Karpathy, but that was easy done as I know nothing about neural nets. I am fascinated when my car uploads X MB of data - 305 to be precise over the last 7 days according to my Google hub. I’ve driven 440 miles in that time, a mix of usual commuting and a longish motorway trip.

It was interesting as he was describing asking the fleet for particular graphics, bikes attached to the back of cars being one. Are you saying that clever as this may be, the neural net can't possibly learn enough without uploading vastly more data than it currently is?

How do you know you have a picture of a bike attached to the back of a car without already being able to know if it's a bike attached to the back of a car? Otherwise you have to send lots of pictures of cars.

You don't need to be an expert in what Tesla is doing, just an expert in this type of problem solving. 305 meg, 440 miles: less than 1 meg a mile. A mile typically takes more than a minute to drive, so we're talking around 10k of data per second on average. That's not much to learn from.
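To put rough numbers on that, here is a quick Python sketch. The 60 seconds per mile is an assumption taken from "more than a minute" above, so the real average would be lower still, and it treats the whole upload as if it were spread evenly over driving time.

```python
# Back-of-envelope check of the upload figures quoted in the thread.
MB_UPLOADED = 305        # reported upload over 7 days
MILES_DRIVEN = 440       # miles driven in the same period
SECONDS_PER_MILE = 60    # assumption: roughly a minute per mile


def upload_rate_kb_per_s(mb, miles, seconds_per_mile):
    """Average upload rate in KB/s of driving time, taking 1 MB = 1000 KB."""
    driving_seconds = miles * seconds_per_mile
    return mb * 1000 / driving_seconds


mb_per_mile = MB_UPLOADED / MILES_DRIVEN
rate = upload_rate_kb_per_s(MB_UPLOADED, MILES_DRIVEN, SECONDS_PER_MILE)
print(f"{mb_per_mile:.2f} MB per mile, roughly {rate:.1f} KB/s while driving")
```

That lands a little under 0.7 MB per mile and in the region of 10 KB per second, which is the ballpark used above.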

Back to genuine FSD, what happens when a camera gets splashed with mud? My rear camera is forever getting dirty to the point of being unusable.

Heres Johnny said:

How do you know you have a picture of a bike attached to the back of a car without already being able to know if it's a bike attached to the back of a car? Otherwise you have to send lots of pictures of cars.

You don't need to be an expert in what Tesla is doing, just an expert in this type of problem solving. 305 meg, 440 miles: less than 1 meg a mile. A mile typically takes more than a minute to drive, so we're talking around 10k of data per second on average. That's not much to learn from.

Back to genuine FSD, what happens when a camera gets splashed with mud? My rear camera is forever getting dirty to the point of being unusable.

Why are you working it out per mile? Pointless.

It'll keep and send data only when it needs to, on incidents or vehicles that don't match what it expects

DJP31 said:

It was interesting as he was describing asking the fleet for particular graphics, bikes attached to the back of cars being one. Are you saying that clever as this may be, the neural net can't possibly learn enough without uploading vastly more data than it currently is?

There are far smarter people than I am working in this field, so I'm sure Tesla has better ideas than I have on this. However, neural nets are interesting for how they learn. They pick up on signals that are strongly correlated with the information they're designed to extract. The problem is that those signals can be quite unexpected. The legendary story of early machine vision was a project for the military that was intended to spot tanks hidden in dense vegetation. They had a huge (for the time) training set of aerial photos of tanks hidden amongst trees, and then another set of empty woodland. In the lab, the neural network trained up to a really high success rate of identifying the pictures containing tanks.

Then they tested it in the field. It was a disaster. Almost 100% failure rate. They couldn't figure out what had gone wrong. Until someone realised that the training set had been obtained at great expense by getting the army to park their tanks in a convenient wood over a single day. A single sunny day. All the other data had been collected on normal grey days. So the neural network had learned that if the picture was sunny, there was a tank in it.

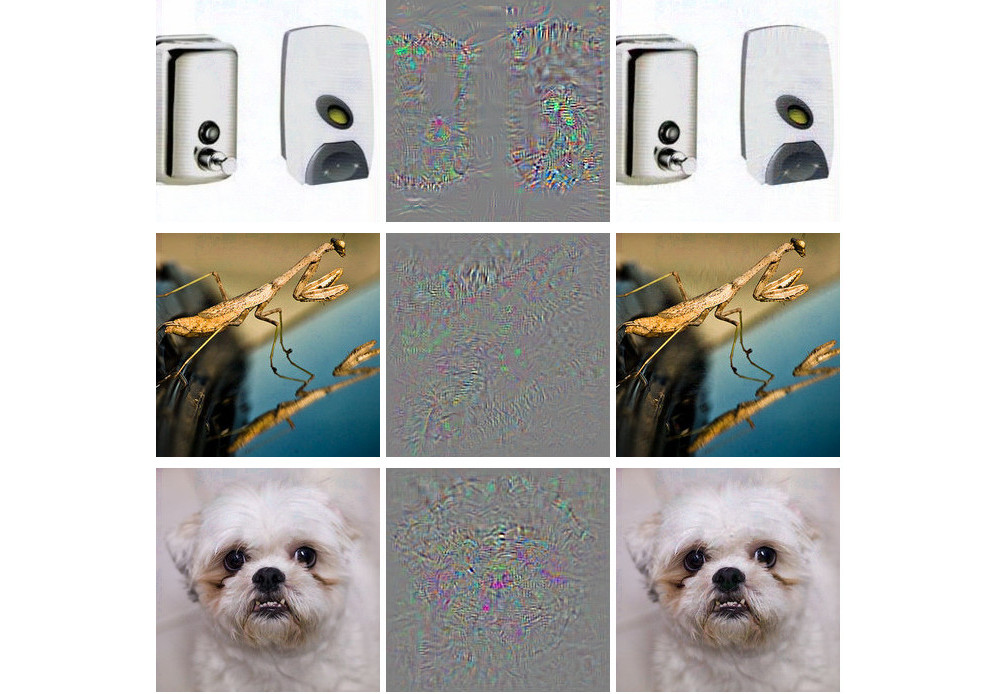

Though things have moved on significantly, neural nets are still a bit of a party trick. Understanding what they're picking up on to give a specific output is not easy - and sometimes it can be as much as a single pixel that causes a result. The study of adversarial images is still relatively new, but there have been some dramatic studies. Placing small stickers in a scene that (to a human) make no difference can completely fool a neural network into 'seeing' something completely wrong. And if stickers can do that, then so might a piece of gum, or a dirty lens, or a stray reflection.

This is from a study from a few years back. The images on the left were correctly identified by a neural net. The centre column shows the 'error margin' for the identification, which is added back in to create the images on the right. To a human, each pair of images looks pretty much identical. To the neural net though, all the images on the right appeared to be pictures of ostriches.

In the case of Tesla, spotting these adversarial images is a real challenge. Google (Waymo), who are leaders in machine learning, have said that some recognition tasks, like correctly identifying traffic lights, are essentially still unsolved. Sure, in good conditions everything works, but what is hard to classify is what might 'fool' the network into making a wrong decision. A picture of a traffic light on a billboard? A pedestrian in a bright green jacket? A reflection of a light on another car's windscreen?

Which is why there is a puzzle over how Tesla are addressing the training problem. You can't ask the cars to identify misdiagnosed images, because how would they know they're misdiagnosed? "Show me pictures where you didn't see a pedestrian" is clearly a non-starter. That's rather trivialising the problem, but you get the idea. Because Tesla is completely relying on machine vision for FSD, they have to be really, really sure it's robust. And how do you do that if you're uploading at most an image set every minute or so? Try closing your eyes for sixty seconds when you're driving (no, don't!) and tell me you've not missed something important.

As I say, this is a gross simplification, and it's clear that great strides have been made towards some level of autonomy. But if Musk is saying FSD is not happening this year, then it means the problems are not even remotely solved yet, despite the video that neatly avoids making any claims.

Tuna said:

REALIST123 said:

I neither know, nor (if I'm honest) care much either way about autonomous cars from Tesla or anyone else.

What I do wonder is why Musk doesn't put his efforts into building better cars for an affordable price. Surely that would generate sales quicker than making nebulous promises of something most don’t care about?

From what I understand, the announcements weren't for the benefit of his customers - they were purely there to keep investment coming in. Musk needs to keep investors interested far more than he needs sales at the moment, particularly when he seems to be resource constrained on manufacture.

Interesting part rework of the Model S: with the Model 3 rear motor in the front, it gets a 370-mile rating and 400 at cruising speed.

https://www.motortrend.com/cars/tesla/model-s/2019...


RobDickinson said:

Standard Long Range Model S does 0-60 in 3.7 now too, and it's not even the Performance model.

They have basically made a great car better, through nothing but efficiency changes. Imagine the range boost when the new battery pack arrives... But I'm sure the resident Tesla haters will be along soon to comment on some negative aspect of why more efficient cars are a bad idea.

RobDickinson said:

Heres Johnny said:

How do you know you have a picture of a bike attached to the back of a car without already being able to know if it's a bike attached to the back of a car? Otherwise you have to send lots of pictures of cars.

You don't need to be an expert in what Tesla is doing, just an expert in this type of problem solving. 305 meg, 440 miles: less than 1 meg a mile. A mile typically takes more than a minute to drive, so we're talking around 10k of data per second on average. That's not much to learn from.

Back to genuine FSD, what happens when a camera gets splashed with mud? My rear camera is forever getting dirty to the point of being unusable.

Why are you working it out per mile? Pointless.

It'll keep and send data only when it needs to, on incidents or vehicles that don't match what it expects

Take the scenario they demo'd of the car in an adjacent lane twitching. If they only look at the examples where the car did actually move into your lane, they cannot filter out all the behaviour that also shows up when the car didn't. It's like saying we're going to study diabetes by only (and that's the key point, ONLY) looking at people with diabetes, and guess what: they pretty much all have two legs and two arms... not sure that's conclusive. So you end up with spurious detection because you don't remove the background noise you need to factor out. I suspect this is the reason why Tesla gets so much phantom braking for bridges: they've not worked out bridges, as drivers never brake for them, and a bridge might look like a lorry across the road. They're trying to get around this by tuning their models in silent mode, but that's a long old process. Guess what, diabetes has nothing to do with two arms and legs... now what do we look for?
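A toy Python sketch of that point, with entirely made-up numbers (the "high blood sugar" feature is just a stand-in I've added for a genuinely informative signal). Looking only at the positives, both features look common; you only see which one matters once you compare against the negatives.

```python
# Made-up toy data: each record is (has_two_legs, high_blood_sugar, has_diabetes).
people = (
    [(True, True, True)] * 9 + [(True, False, True)] * 1        # 10 with diabetes
    + [(True, False, False)] * 85 + [(True, True, False)] * 5   # 90 without
)


def prevalence(records, feature_index):
    """Fraction of records in which the given feature is present."""
    return sum(r[feature_index] for r in records) / len(records)


positives = [p for p in people if p[2]]
negatives = [p for p in people if not p[2]]

for name, idx in (("two legs", 0), ("high blood sugar", 1)):
    in_pos = prevalence(positives, idx)
    in_neg = prevalence(negatives, idx)
    print(f"{name}: {in_pos:.0%} of positives, {in_neg:.0%} of negatives")
```

"Two legs" is just as common in both groups, so it tells you nothing, but you can only know that if you also collected the cases where nothing happened.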

Low data upload means there are only a very few select cases they're harvesting, otherwise they're just not confirming enough events. Take the scenario of detecting if a car is about to enter your lane: I think they said they go back 5 seconds' worth of data. 5 seconds of video from multiple cameras... that's going to be a couple of meg just for that one scenario, and they need to be running hundreds of scenarios: was that a car braking in front, was that a car turning, was that a bridge, a pedestrian about to step into the road, a traffic cone, a junction, a car closing too quickly behind...
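As a rough budget in Python: this assumes 2 MB per clip ("a couple of meg") and that the whole 305 MB weekly upload reported earlier in the thread was clips, both of which are guesses rather than anything Tesla has stated.

```python
# Rough clip budget under the assumptions stated in the lead-in.
WEEKLY_UPLOAD_MB = 305   # figure reported earlier in the thread
MILES_DRIVEN = 440       # miles driven in the same week
CLIP_SIZE_MB = 2.0       # assumption: "a couple of meg" per 5-second multi-camera clip

clips_per_week = WEEKLY_UPLOAD_MB / CLIP_SIZE_MB
miles_per_clip = MILES_DRIVEN / clips_per_week
print(f"about {clips_per_week:.0f} clips a week, one every {miles_per_clip:.1f} miles")
```

On those assumptions that is on the order of 150 clips for the week, or one every few miles, to be shared across every scenario they want to train.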
